If you are migrating workloads to the cloud, you have most likely come across scenarios where you need to copy a large amount of data across the network into the cloud. In this post, I describe my experience with one of those scenarios and what I observed. I found the observations and lessons interesting enough to share, so keep reading to the end.

Problem Statement

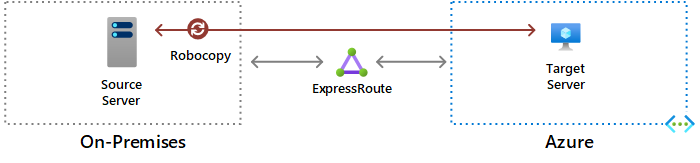

Recently, I was involved in a server-to-server data migration over Azure ExpressRoute. The connection from on-premises to the cloud had high latency: the round-trip time was around 60 ms.

I initially used the Robocopy tool to migrate the files, but it did not use much of the available bandwidth. The maximum I achieved was 280 Mbps, even with Robocopy's multithreaded copy option (/MT). The ExpressRoute circuit had plenty of spare capacity, yet Robocopy did not push the transfer speed beyond this limit.

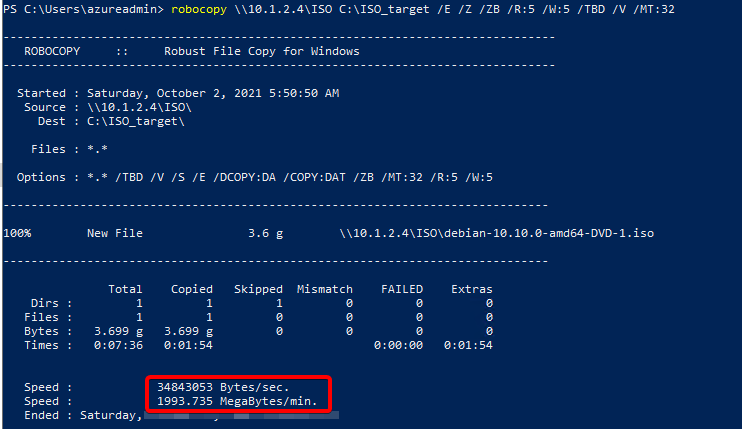

The following is the result of a small example copy.

As you can see, it achieved only 265 Mbps.

Investigation

While reading about Robocopy, I learned that even with the multithreaded flag it does not reach high speeds over a high-latency link, because it uses SMB for the data transfer. In other words, Robocopy is not the best tool for copying data across high-latency connections: the SMB protocol was not designed to cope with them. There is also a maximum bandwidth limit for a single TCP connection to be aware of. For Azure, some measured single-session TCP bandwidth limits are documented here, which gives you a guide to the limits.
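To make the single-connection limit concrete, here is a rough sketch of the usual window-over-RTT reasoning: one TCP connection cannot send more than one window of data per round trip. This is a simplification that ignores SMB's own request/response behavior, and the 64 KiB window and 1 Gbps target are illustrative assumptions, not values measured in this migration. Only the 60 ms round-trip time comes from the scenario above.

```python
def window_limited_mbps(window_bytes: int, rtt_ms: float) -> float:
    """Upper bound on one TCP connection's throughput (window / RTT), in Mbps."""
    return window_bytes * 8 / (rtt_ms / 1000) / 1_000_000


def window_needed_bytes(target_mbps: float, rtt_ms: float) -> float:
    """Bytes that must be in flight to sustain target_mbps at the given RTT."""
    return target_mbps * 1_000_000 / 8 * (rtt_ms / 1000)


RTT_MS = 60  # round-trip time reported in this post

# Assumption: a 64 KiB window, chosen only to illustrate the effect.
capped = window_limited_mbps(64 * 1024, RTT_MS)
print(f"64 KiB window at {RTT_MS} ms caps one connection at {capped:.2f} Mbps")

# Assumption: a 1 Gbps target, chosen only to illustrate the effect.
needed = window_needed_bytes(1000, RTT_MS)
print(f"Filling 1 Gbps at {RTT_MS} ms needs about {needed / 1_000_000:.1f} MB in flight")
```

The takeaway is that at 60 ms each connection needs megabytes of data in flight to go fast, which is why tools that open many parallel connections tend to do better on links like this.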

Moving on from Robocopy, I tried FTP as another method for the copy job, using FileZilla. This required an FTP server to be configured on the source side, with the data pulled by an FTP client on the target side. Note that it also required additional ports (port 21 by default) to be opened between the source and destination servers.

I achieved slightly higher data transfer rates with this method, but it still did not utilize the full ExpressRoute bandwidth, so I looked for other options.

Solution

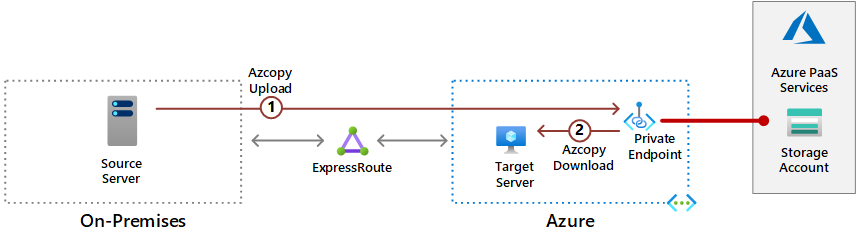

I had initially considered the azcopy tool for this job, but ruled it out because it can only copy files to and from an Azure Storage account (for example, Blob storage), not directly between servers. The low maximum bandwidth achieved with robocopy and FTP compelled me to look at it again. My requirement was to copy files from the source server to the destination server, so with azcopy I had to (1) upload the files to a staging storage account, then (2) download the files to the destination server. Even though this is a two-step approach, it gave me much higher throughput during the copy, and the end-to-end completion time was significantly shorter than with robocopy. The key point is that azcopy is designed for high-latency copy jobs, so it pushes throughput toward the maximum available on the link.
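The two steps could be sketched roughly as follows. This is not the exact command line I ran: the local paths, the storage account and container placeholders, and the SAS token are all hypothetical and need to be replaced with your own values. The sketch only builds the two `azcopy copy` invocations, using the `--recursive` flag to include subfolders.

```python
def azcopy_copy(source: str, destination: str) -> list:
    """Build an `azcopy copy` argument list that copies a folder tree recursively."""
    return ["azcopy", "copy", source, destination, "--recursive"]


# Hypothetical values: replace with your own paths, account, container and SAS token.
SOURCE_DIR = r"D:\data"
STAGING_URL = "https://<account>.blob.core.windows.net/<container>?<SAS>"
TARGET_DIR = r"E:\data"

# Step 1: run on the source server, uploads to the staging storage account.
step1_upload = azcopy_copy(SOURCE_DIR, STAGING_URL)

# Step 2: run on the destination server, downloads from the staging storage account.
step2_download = azcopy_copy(STAGING_URL, TARGET_DIR)

for cmd in (step1_upload, step2_download):
    print(cmd)
```

Each list can be passed to `subprocess.run` on the relevant server, or typed out as a single command line.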

With Azure Private Endpoints, the file transfer traffic still follows the same route: the files are copied over ExpressRoute into the same virtual network. Therefore, from a security perspective, it is not a huge compromise.

What is significant to note here is the download speed from the storage account to the target server. Because they are in the same region, throughput reached as high as 10 Gbps, so the time taken by step 2 is negligible compared to step 1.
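As a back-of-the-envelope check on why step 2 barely matters, the sketch below compares ideal transfer times at the two rates mentioned in this post: the 280 Mbps ceiling seen with robocopy and the 10 Gbps peak seen on the in-region download. The 100 GB data set size is a hypothetical figure for illustration, and real transfers will not hold a peak rate for the whole job.

```python
def transfer_seconds(size_gb: float, rate_mbps: float) -> float:
    """Ideal transfer time for size_gb gigabytes (10^9 bytes) at rate_mbps megabits/s."""
    return size_gb * 8_000 / rate_mbps


SIZE_GB = 100  # hypothetical data set size, for illustration only

direct_s = transfer_seconds(SIZE_GB, 280)       # robocopy ceiling from this post
download_s = transfer_seconds(SIZE_GB, 10_000)  # step 2 at the 10 Gbps peak

print(f"direct copy at 280 Mbps: {direct_s / 60:.1f} min")
print(f"step 2 at 10 Gbps:       {download_s / 60:.1f} min")
print(f"step 2 / direct:         {download_s / direct_s:.1%}")
```

Whatever the data set size, the ratio stays the same: at these rates the extra download step adds under 3% of the time a direct 280 Mbps copy would take.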

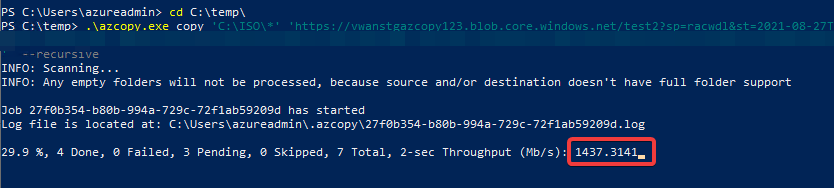

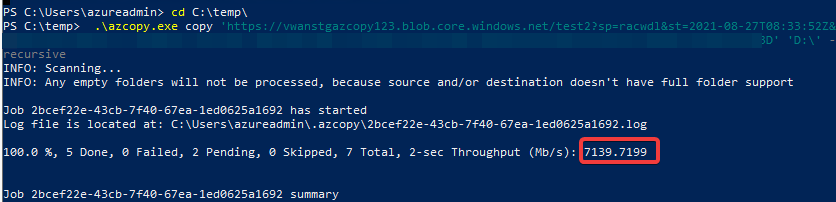

The following is an example of the upload speed achieved.

The following is an example of the download speed achieved.

Conclusion

The conclusion is that a direct copy using traditional tools (like robocopy) is not always the best method in high-latency scenarios, especially when migrating data to the cloud. Other tools (like azcopy) are designed specifically for high-latency scenarios. Even though they cannot do a direct server-to-server copy, they can perform better with a two-step approach to migrate the files to the destination in the cloud.